Meet Olli: Fusion of Autonomous Electric Transport, Watson IoT & 3D Printing

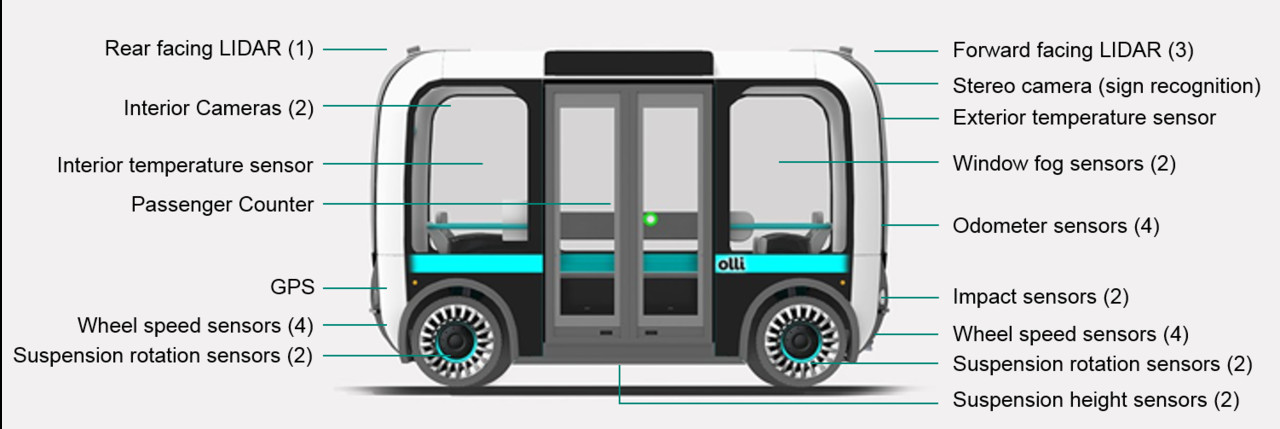

On June 16th, 2016, Arizona-based startup Local Motors, in partnership with IBM, launched Olli in National Harbor, MD. Olli is a 12-seater, autonomous neighborhood mobility solution. It can be summoned via a mobile app, and you can interact with it in natural language, either inside the vehicle or nearby, all using IBM Watson technologies. It is monitored remotely by a human overseer and drives autonomously using a package of sensors (see picture above). A few places, such as Miami-Dade County and Berlin, are exploring pilots with Olli. You can also expect Ollis on university or work campuses, large private estates and theme parks, and in local communities (e.g., Olli school buses, last (few) mile connectors to metro lines, retirement villages).

The idea of a pod-like vehicle is not new (e.g., at Heathrow Airport, London, or in Masdar City, Abu Dhabi). However, an autonomous pod that works on existing (not greenfield) infrastructure such as city or campus roads, and co-exists with regular traffic, is novel.

The flagship vehicles at Local Motors have each involved an open design challenge (called co-creation), in which community participants designed the car and the winner received a prize (and sometimes had their name acknowledged on each vehicle). The Strati was designed by Michele Anoè and the LM3D by Kevin Lo, both members of the Local Motors community. Olli was designed by Edgar Sarmiento and was initially named the "Berlino", from the Urban Mobility Challenge: Berlin 2030 (see below).

3D Printing & Autonomous Technology

Local Motors takes designs from its community and refines them for Direct Digital Manufacturing (DDM). The company envisions local micro-factories making small volumes of cars. DDM is the fabrication of components in a seamless manner from computer design to the actual manufactured part. DDM can incorporate 3D printing (which is usually additive manufacturing), but "direct digital manufacturing" and "rapid prototyping" can refer to either additive or subtractive methods (like laser cutting or CNC sawing). Deposition processes lay down material in successive thin layers from a "print head", where the "ink" may be a melted polymer or a dense suspension of particles (metal, ceramic, or polymer).

How does DDM work? Consider creating a coffee cup. An old-style craftsman might slowly shape a piece of clay by hand into a handmade mug. Designers and machinists in a factory would build a series of metallic molds and then create multiple tools to mill metal into the key components of the cup which would then be assembled on a production line, often through welding. By contrast, a DDM designer would create a digital 3D model of the cup, then turn production over to the computer, which would digitally slice it into a stack of wafer-thin layers, print them out, and fuse them together. Reality is a little more complex. Below is a video on 3D Printing 101 on how to print a car (courtesy: Local Motors)
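To make the slicing step in the coffee cup example concrete, here is a minimal Python sketch. It assumes a simple tapered cup and a fixed layer thickness. The function name `slice_cup` and all dimensions are hypothetical illustrations, not anything from Local Motors' actual toolchain. Real slicers also generate tool paths, infill and supports.

```python
import math


def slice_cup(height_mm, bottom_radius_mm, top_radius_mm, layer_mm):
    """Slice a tapered cup into horizontal layers.

    Returns a list of (z_mm, outer_radius_mm) pairs, one per layer,
    where z_mm is the height of the layer's lower face.
    """
    n_layers = math.ceil(height_mm / layer_mm)
    layers = []
    for i in range(n_layers):
        z = i * layer_mm
        # Linear taper from the bottom radius to the top radius.
        radius = bottom_radius_mm + (top_radius_mm - bottom_radius_mm) * z / height_mm
        layers.append((z, radius))
    return layers


# Hypothetical cup: 100 mm tall, 30 mm radius at the base, 40 mm at the rim,
# printed in 0.5 mm layers.
layers = slice_cup(height_mm=100.0, bottom_radius_mm=30.0,
                   top_radius_mm=40.0, layer_mm=0.5)
print(len(layers), "layers; first:", layers[0], "last:", layers[-1])
```

Each pair tells the printer how wide a ring to deposit at a given height. Stacking the rings from bottom to top rebuilds the cup.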

Local Motors created waves in 2014 by 3D printing a road-worthy car, the Strati. The printing took 44 hours to complete and, remarkably, was witnessed by a live audience at the 2014 International Manufacturing Technology Show (IMTS) in Chicago. The car consists of 50 parts, far fewer than a traditional vehicle (~30,000 parts). To 3D print Olli, Local Motors used the same process as it did for its LM3D Swim, the first 3D printed car. The video below (from Business Insider) shows how a 3D printed car like the Strati is made:

For Olli, Local Motors used Big Area Additive Manufacturing (BAAM) 3D printing technology co-developed by Cincinnati Inc and Oak Ridge National Laboratory. At a rate of 100 pounds/hour, Olli can be printed in about 10 hours, and assembled in another hour, about the time it takes for a regular car to be built end-to-end. While the pipeline benefits of the traditional production line are not there, multiple micro-factories can stamp out lower volumes locally.

The Carbon Fiber Reinforced Polymer (CFRP) used for the vehicle is 80 percent acrylonitrile butadiene styrene (ABS) and 20 percent carbon fiber. Between a quarter and a third of the vehicle was 3D printed, including the shape that was used to create the mold for Olli. The buy-to-fly ratio, which measures how much material (e.g., metal or CFRP) is wasted, is also attractive with 3D printing (close to 1, versus a double-digit number in auto manufacturing). This has an impact on the embedded energy in the vehicle. The material in the car can also be recycled into future 3D printed objects. Note that the engine etc. are not 3D printed.
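As a rough illustration of why a buy-to-fly ratio close to 1 matters, the sketch below uses the usual definition: the mass of raw material bought divided by the mass that ends up in the finished part. The function names and the sample masses are hypothetical and chosen only to contrast a near-1 ratio with a double-digit one.

```python
def buy_to_fly(raw_material_kg, finished_part_kg):
    """Mass of material bought per unit of mass in the finished part."""
    if finished_part_kg <= 0:
        raise ValueError("finished_part_kg must be positive")
    return raw_material_kg / finished_part_kg


def wasted_fraction(ratio):
    """Share of the purchased material that does not end up in the part."""
    return 1.0 - 1.0 / ratio


# Hypothetical masses, for illustration only.
printed = buy_to_fly(raw_material_kg=105.0, finished_part_kg=100.0)
machined = buy_to_fly(raw_material_kg=1000.0, finished_part_kg=100.0)

print(f"printed:  ratio {printed:.2f}, wasted {wasted_fraction(printed):.0%}")
print(f"machined: ratio {machined:.2f}, wasted {wasted_fraction(machined):.0%}")
```

With these made-up numbers, the near-1 case discards only a few percent of the material. A ratio of 10 means most of what was bought is cut away.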

Phoenix Wings supplies the autonomous drive system that physically controls Olli. Their self-driving system is the interface to sensors throughout the vehicle and controls all of Olli's functions. The sensors are also networked to the cloud by IBM Watson IoT. "Cloud" IoT and "edge" IoT machine learning components combine to provide a safe and enjoyable experience. The Olli ecosystem (i.e., fleet management + mobile app) is shown below. While initial versions will operate on pre-defined routes, in the near future Olli can be summoned like Uber via the app.

And, oh, Olli has a powertrain with electric drive as well, i.e. it is also an EV. While there is a regular electric charging cord, in the future recharging is expected to happen when it stops to onboard / offboard passengers via inductive charging (currently it has a 40 mile range).

Cognitive IoT and IBM Watson

For natural human interaction, the platform leverages four Watson developer APIs (Speech to Text, Natural Language Classifier, Entity Extraction and Text to Speech) to enable seamless interactions between the vehicle and passengers. An outline of an example interaction and API flow is shown below. Users can ask queries about destinations ("Olli, can you take me downtown?"), information ("Olli, how are you able to self-drive?"), orders ("Olli, take me to work!"), operations ("What's the temperature here?"), the vehicle ("What is our speed? Why did you stop?"), local interests ("Where is the best pizza place nearby?") and rider surveys ("What do you like? How can I improve?"). A wide variety of querying capabilities are being rolled out in stages.

Watson Speech to Text (STT) understands spoken language from passengers: it converts their questions to text for processing. Natural Language Classifier (NLC) interprets the intent behind the text and returns a corresponding classification with associated confidence levels. Entity Extraction identifies named entities in the text, such as persons, places and organizations. Text to Speech (TTS) takes text-based responses from Watson and converts them to speech to answer the passenger's inquiry. In addition, the Watson Conversation API adds a natural language interface to applications to automate interactions with end users.
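The flow described above (STT, then NLC, then Entity Extraction, then TTS) can be sketched as a simple pipeline. This is only an illustration of the order of the steps. Every function below is a hypothetical local stand-in written for this example, not a call into the real Watson SDK, and the keyword lists, confidence value and replies are made up.

```python
from dataclasses import dataclass


@dataclass
class Reply:
    intent: str
    confidence: float
    entities: list
    spoken_text: str


def speech_to_text(audio: bytes) -> str:
    # Stand-in for STT: pretend the "audio" is already UTF-8 text.
    return audio.decode("utf-8")


# Toy keyword table standing in for a trained classifier.
INTENT_KEYWORDS = {
    "destination": ("take me",),
    "vehicle": ("speed", "stop"),
    "local_interest": ("nearby", "best"),
}


def classify_intent(text: str):
    # Stand-in for NLC: return (intent, confidence).
    lowered = text.lower()
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in lowered for keyword in keywords):
            return intent, 0.9  # made-up confidence
    return "unknown", 0.0


KNOWN_PLACES = ("downtown", "work")


def extract_entities(text: str) -> list:
    # Stand-in for Entity Extraction: match against a tiny list of places.
    lowered = text.lower()
    return [place for place in KNOWN_PLACES if place in lowered]


def text_to_speech(text: str) -> bytes:
    # Stand-in for TTS: return the reply as bytes instead of real audio.
    return text.encode("utf-8")


def handle_utterance(audio: bytes):
    text = speech_to_text(audio)
    intent, confidence = classify_intent(text)
    entities = extract_entities(text)
    if intent == "destination" and entities:
        answer = f"Heading to {entities[0]}."
    else:
        answer = "Sorry, could you rephrase that?"
    return Reply(intent, confidence, entities, answer), text_to_speech(answer)


reply, audio_out = handle_utterance(b"Olli, can you take me downtown?")
print(reply)
```

In a real deployment each stand-in would be replaced by the corresponding cloud service, and the reply logic would draw on route and fleet data rather than a fixed string.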

These are just the beginning. As with Tesla cars, the fleet will be updated remotely and continuously with new cognitive IoT capabilities. Olli also enables new business models in partnership with local communities, cities and campuses. Here is a picture of IBM General Manager Harriet Green interacting live with Olli at the IFA conference in Berlin in September 2016. The full video is here.

Summary

Autonomous transportation is clearly coming. Tesla, Google, Uber and all major car companies, and maybe even Apple (?), are moving rapidly towards it. Beyond helping drivers reduce their stress and improving road safety compared to human drivers, the fleet / public transportation possibilities are enormous. Autonomy matches very well with shared / public transportation. For example, even with an individually used vehicle, after dropping off one person the vehicle can (slowly) move on to serve another (and not require a parking spot). Olli appears to have a compelling size (not too small or large), can hit the right economics (given the local scale of manufacturing), is flexible, and can drive a 10-50X improvement in transportation densities (10X due to 10-12 people per Olli, and another 5X due to sharing of the vehicle), complementing existing public transportation and road investments.
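The 10-50X range is a product of two factors, which a few lines of Python make explicit. The baseline of a single-occupant, unshared vehicle is my reading of the comparison. The function name is hypothetical, and the 10 riders and 5X sharing factor are the round numbers quoted above.

```python
def density_gain(riders_per_vehicle, sharing_factor):
    """Improvement over one rider in one unshared vehicle."""
    return riders_per_vehicle * sharing_factor


occupancy_only = density_gain(riders_per_vehicle=10, sharing_factor=1)
with_sharing = density_gain(riders_per_vehicle=10, sharing_factor=5)
print(f"{occupancy_only}X from occupancy alone, {with_sharing}X with sharing")
```

The low end of the range counts only the seats filled per trip. The high end also counts the same vehicle being reused across riders during the day.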

Since such transportation is for humans, the natural interface w/ Olli via Watson technology is a brilliant human touch. The capabilities of natural interaction are just the beginning; interacting w/ social, mobile and IoT devices in the neighborhood via the Olli app will open up a range of future possibilities. Mobile payments etc. will also be a cinch: the entry / exit points can simply be recorded in the app and the fare automatically debited from your account (no swiping etc.).

I am looking forward to Olli on the tech campuses in India as well! Oh, and also to 3D printed scooters and novel designs for the roads and challenges of Indian transportation. The challenge is also to get there at lower cost. The Olli model of using group transportation to hit the economic sweet spot seems like a good starting point.

Twitter: @shivkuma_k

ps: Material in part from several open sources, including Wikipedia, Local Motors, IBM Watson IoT AutoLAB, The Verge, Autoblog, 3dprintingindustry.com etc.

pps: List of all my LinkedIn Articles
